Nipun Mehta, Apr 13, 2024 in Service Fellows

[TLDR: On April 27th, we're convening to share some exciting updates from different corners of the ServiceSpace AI ecosystem. RSVP here.]

AI is only as good as its data. That raises the question of what data actually is.

Back in 2017, in a talk on Algorithms and Intuition, I argued that computing algorithms that help us process "big data" should be coupled with intuitions that use our evolutionary carbon apparatus to tune into "deep data."

Today’s tech innovators tend to view deep data as a failure of our measurement tools -- a gap that bigger, faster, more pervasive tech would close. To some degree, that’s reasonable logic. In recent months, AI has upped the tally of our raw building materials from 38 thousand to ... 380 thousand! Yet at what point does engineering become a hammer that turns everything into a nail? "Death bots" are increasingly popular. Billions of dollars are being invested in the premise that aging and disease are just data-processing problems that can be solved with the right computing, data, and algorithms. Is it wise to project a facade of control over fundamentally impermanent phenomena?

For an increasing number of people, dataism is starting to feel like a religion. Is presence just unrealized information? Was the Buddha just a proxy for the right data, intelligence, and interventions that helped us get enlightened? Or is there a fidelity loss from presence to deep data to big data? Can collected datasets ever catch up to collective intelligence?

Right now, the AI revolution is barreling forward at breakneck speed, without much reflection. For instance, ChatGPT was built largely on the web's Common Crawl data. Yet, "More than half of all websites are in English when more than 80% of the world doesn’t speak the language. Even basic aspects of digital life -- searching with Google, talking to Siri, relying on autocorrect, simply typing on a smartphone -- have long been closed off to much of the world." If language is the architecture of thought, and thought is the grammar for inner transformation, what is lost with this unexamined homogeneity? Will AI help us bridge such gaps (as it is more than capable of doing), or, in a frenzy to be first to market, will it only accelerate and automate the status quo?

The default is clear: AI is a hungry ghost for data. That much is undisputed. Naturally, that will incentivize ever more extractive tools. Your Kindle will read you as you read the book. A Barbie doll will track every sentence and feeling of a child. As problematic as this might sound, it is hardly the most dystopian scenario. We’re going to run out of data: GPT3 used 300 billion tokens, GPT4 reportedly used 13 trillion, GPT5 is projected to need 50 trillion, and by GPT7 we’ll need quadrillions. Even tracking every sensation of every form of life will be insufficient.

To remedy this, we're now paving the way for artificial data, often referred to as “synthetic” data. In seconds, we can create a unique headshot of a face that never had a birthday; with a simple text prompt, Udio can help create an original song with its own lyrics, composition and chords; similarly, Sora can instantly create a believable video of a couple of dogs doing a podcast in the Himalayas. Many multi-billion-dollar companies are working on preserving the data and intelligence of our loved ones, so if someone passes away, you can still exchange texts and get contextual responses back. “Live Forever”, one of their taglines reads. And that's just one hop away from the metaverse, where we interact with simulations and unending combinations of synthetic data that never expire.

This isn’t deep data; it isn’t even big data. It’s just noise, without any mooring. And noise, left unchecked, drowns out the signal.

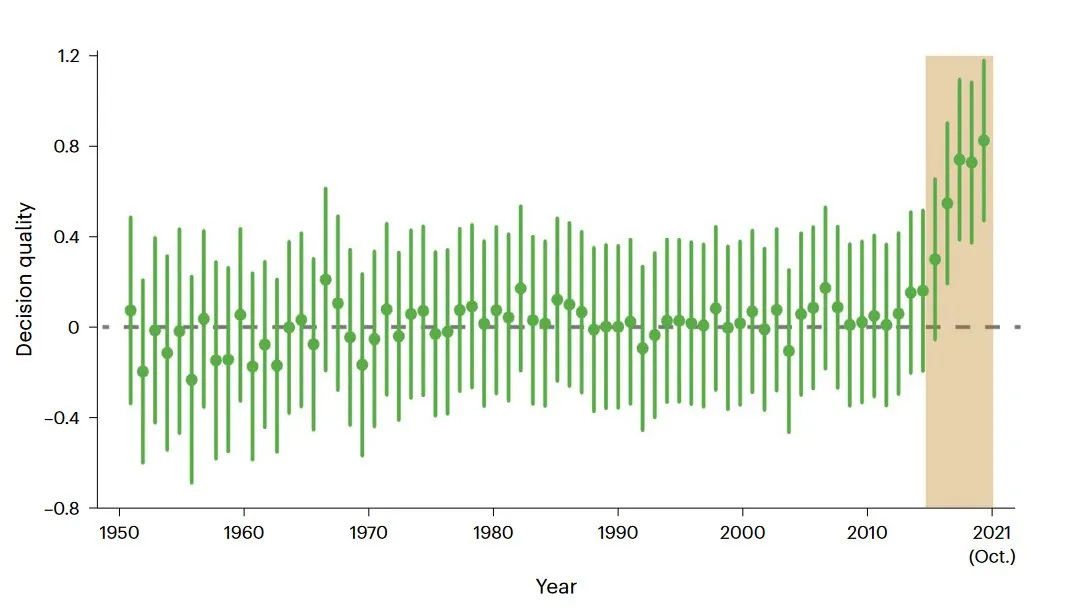

Consider chess. In 1997, IBM's Deep Blue beat grandmaster Garry Kasparov with a brute-force approach, evaluating 200 million positions per second on top of chess knowledge supplied by human experts. In 2017, DeepMind's AlphaZero spent just a few hours playing millions of games against itself, without *any* human game data, and then trounced Stockfish, the strongest chess engine in the world, in a 100-game match without losing a single game.

The same thing happened with the much more complex game of Go. In 2016, AlphaGo learned from human games and then from self-play; a year later, AlphaGo Zero learned from self-play alone and beat that earlier version 100-0. Human data first, then augmented by computed data, and then iteration over purely synthetic data. And now that logic is coming for all human experiences. OpenAI started with a crawl of much of the public web, then reportedly used Kenyan workers paid less than $2/hr to accelerate its way from GPT3 to GPT4, and the upcoming GPT5 may not require human labor at all.

How, then, will we keep the flame of the sacred alive in a synthetic world?

That’s the crossroads AI brings us to. It’s an exciting collective moment, actually, because it forces a head-on collision with life’s most pressing questions: Who am I, really? What do I know to be true beyond my thoughts and sensory experiences? What about my existence can't be hacked and manipulated?

If humanity confuses and conflates big data with deep data, AI will become the almighty hungry ghost, with us as its puppets. We’re already halfway there: it’s no longer just an online video, but YouTube’s subversive “you will also like” recommendations, Netflix “binging,” Minecraft’s invitation to live inside the video game, Microsoft's augmented reality, and Zuckerberg’s metaverse. The writing is on the wall.

Yet if we can filter signal from noise and discern the nuance, as we are very capable of doing, AI can open new pathways to human flourishing. If a spiritual person is simply defined as someone who has a relationship to deep data, it’s time for such spiritual people to get active. And for active people to get spiritual.

Enter ServiceSpace AI.

At a baseline level, we have to preserve uncommon datasets. From the get-go, we’ve had 25 years of high-quality, annotated ServiceSpace inspiration to draw on. So many authors have come on board. Pom, a leader of the Winnemem Wintu, a Native American tribe, joined our Gandhi 3.0 retreat in January and is piloting an indigenous bot. Rick at BatGap has inspired many dozens of prominent spiritual teachers (also guests on his podcast) to engage. Preeta is in conversation with a group that holds the rights to all of J. Krishnamurti’s content. Victor inspired Mingyur Rinpoche and his team to join our platform, and Tim has passed the word to the Dalai Lama’s office. Leaders of the movement of Vinoba Bhave (Gandhi’s successor) have given us all the content that powers the VinobaBot. Last month in Germany, Wakanyi gathered elders from a dozen countries to explore how their oral traditions can be preserved in an AI world. Tapan and many of his PhD students are actively pursuing non-English datasets. Michael Lerner's herculean effort to include alternative therapies in cancer treatment is part of a Cancer Bot. A lot is underway.

In terms of volume, this is barely half a terabyte of data, but our intent isn’t to chase big-data volume. It’s actually the opposite: small data. If Large Language Models (like GPT4, Claude, Gemini, Llama, Mistral) focus on horizontal datasets, we are going for vertical datasets.

Secondly, that small data is stronger when it is connected in a polyculture field. In our pilot program, about 50 authors, like Sharon Salzberg, Peter Russell, Jeffrey Mishlove, and Jem Bendell, joined our platform. That’s significant because our platform plays by our non-commercial rules. :) With the click of a button, any author can make their “vertical” datasets available to everyone else on the platform. And with all these shareable datasets of wisdom, you can create unending unique combinations of intelligences. The strength lies not in the static data, but in how these dynamic datasets relate to one another to spawn co-creative intelligences.

Thirdly, as these vertical datasets start forming myriad expressions of values-oriented intelligence, we can start creating many different applications. Chatbots are popular these days, but that’s just one kind of app, and it privileges people who can articulate the right questions. As we proceed, there will be millions of different apps. Within ServiceSpace operations, we have created many AI tools that radically reframe how we manage our engagement spectrum, from DailyGood to Pods, and how we imagine new volunteer opportunities. Our team in India is building a “decelerator” that aims to slow big-data momentum and accelerate deep-data wisdom in myriad ways. Much of this is experimental right now, but there’s a lot of excitement about its potential.

All three of those phases are critical and pioneering, and I’m delighted that ServiceSpace has found itself playing a role at ground zero of AI evolution. While that is necessary, it is not sufficient.

What ServiceSpace truly brings to the table is the wisdom of intrinsic motivation. I often joke that ServiceSpace is nature-funded: if you do a small act of service, nature rewards you with endorphins, dopamine, serotonin, and oxytocin. That’s why we’ve been able to mobilize millions of volunteer hours over 25 years. The moment nature changes its algorithm and stops regenerating such virtues, ServiceSpace will cease to exist. :) The marketplace, on the other hand, leans on extrinsic rewards to win zero-sum games of self-maximizing transactions. If that becomes the only game in town, AI will handily surpass us, and, as Yuval Noah Harari notes, we might give birth to a “useless class.” If, however, market forces can do their big-data magic while embedded in a larger cocoon of deep-data wisdom, AI could greatly augment the arc of our evolution, with technological innovations that don’t shrink our spheres of connection into synthetic data but actually help us cultivate inner transformation and grow our humanity.

What does nature-funded AI look like?

That’s a hard question, because it requires insight at the intersection of 3 C’s – computing, community and compassion. It’s hard to find Silicon Valley techies, front-line Gandhians, and Himalayan yogis in the same room. :) Yet, that’s what is needed to catalyze, scale and regenerate the possibilities of natural wisdom.

At the UN last week, I summarized our latest learnings via these three points:

- AI will far exceed individual (intellectual) intelligence. It’s inevitable.

- AI cannot include heart intelligence. It’s impossible.

- AI will be far inferior to collective heart intelligence. Always.

From where I stand, it’s quite clear that AI will surpass human beings in the marketplace. Today’s rudimentary, probabilistic AI will soon reach AGI (Artificial General Intelligence, capable of thought), which will then give way to ASI (Artificial Super Intelligence, smarter than all of humanity), a milestone Elon Musk predicts will arrive within the next three years.

Archived knowledge, however, has never been the hallmark of human ingenuity. AI relies on data sets, which are quantified archives of the past. The past is certainly important, but humanity excels in the emergent present -- living into an unknowable emergence that is co-created in the space in between two dynamic entities. We are capable of relating to the unknowable, in a way that allows for a high-bandwidth input into the present moment. We are capable of watching a sunrise with an unconditioned mind, *before* it turns into an archived experience for our senses and mind (and AI) to make meaning. That is the foundation for heart intelligence.

Such heart intelligence starts with personal coherence, then effortlessly expands into social coherence and dissolves into ever-expanding spheres of cosmic coherence. It’s not linear, though. The cosmic flows through the social and personal, just as the social affects the cosmic and personal. That organic expansion and contraction necessarily blurs the boundaries of our identity, and forms the roots of collective heart intelligence. The ancients have given it a thousand names, from the field of consciousness to the dance of emptiness to the Tao. Like water around a fish, it’s all around us: in a redwood’s fairy rings, in synchronized fireflies at night or starlings during migration season, even in the optimized pathways of our microbiomes.

Maybe Minouche Shafik is right when she says, "In the past, jobs were about muscles. Now they’re about brains, but in the future, they’ll be about the heart." And not just for jobs, but for life.

The AI evolution, at its best, invites us to elevate our finite, zero-sum games into an infinite game. Srinija, who vice-chaired Stanford’s board and its Human-Centered AI board, sums it up nicely: “AI is a great mirror of the past, not an oracle for our future.” We know that AI will process and synthesize datasets into stunning answers, but it remains to be seen whether it can help humans ask better questions that help us live into a creative, unknowable, and emerging future.

When AlphaGo pummeled Lee Sedol, one of the world’s best Go players, with creative moves that humans had never seen before, you might have expected us to stop playing Go altogether. Instead, humans started innovating in a way they hadn’t in decades, once an open-source Go engine, Leela Zero, made AI's reasoning available to study.

AI reversed our collective stagnation. It pushed us to learn and evolve in a new way. Can AI reveal new "moves" that push us to become more caring and compassionate?

Will we be able to resist the short-lived convenience of a low-grade stagnation inside a simulation of synthetic data? Or will AI awaken us to new realms of human possibility?

Howard Thurman used to say, “Don’t ask yourself what the world needs. Ask yourself what makes you come alive, and go do that, because what the world needs is people who have come alive.” Our noble friends are those who remind us of our deepest aspirations, which truly make us come alive. Can AI be a noble friend? Instead of delivering glossy answers to our suffering, perhaps AI can raise questions that invite a dip into the ocean of collective heart intelligence. Perhaps we can use this moment in our evolutionary arc to regenerate collective wisdom -- and throw a better party. :)

A lot remains unknown, but experiments are underway. And on April 27th, we invite you to help co-create that better party. :) A few of us are convening to share some exciting updates from different corners of the ServiceSpace AI ecosystem, and heartstorm how our shared values might accelerate different dimensions of its future arc. RSVP here.

Thank you for bridging the certainty of big-data with the mystery of deep-data, in a way that keeps unconditioned creativity alive.